To become a data scientist, you need to understand the different kinds of machine learning algorithms. To understand those algorithms, I recommend you read UNDERSTANDING MACHINE LEARNING ALGORITHMS before moving on to coding.


As mentioned in the last blog, practical knowledge is just as important as theory. The sole purpose of this project is to get your hands dirty and start analyzing data and discovering patterns on your own in a basic, easy way.

GitHub link is given at the bottom of the page.

Let's begin.

PROBLEM STATEMENT:

The task is to predict whether a bank should give a loan to a person or not. This is a CLASSIFICATION problem. To know more about classification and other types of algorithms, refer to the above-mentioned link.

In this project, we will use several algorithms to predict our target variable.

PROBLEM SOLUTION:

First of all, you need to import all of the libraries which are used in this project.
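The original import cell is not shown here, so the following is only a plausible set of imports for a project like this. It assumes pandas, NumPy, Matplotlib, and scikit-learn are installed; the exact libraries the project uses may differ.

```python
# Assumed library set; the post's actual imports are not shown.
import numpy as np                                    # numerical helpers
import pandas as pd                                   # loading and cleaning the dataset
import matplotlib.pyplot as plt                       # data visualization

from sklearn.model_selection import train_test_split  # split data into train/test sets
from sklearn.naive_bayes import GaussianNB            # Naive Bayes classifier
from sklearn.ensemble import RandomForestClassifier   # random forest classifier
from sklearn.metrics import accuracy_score            # evaluate predictions
```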

After successfully importing the libraries, our first step is to load our data. The CSV file (dataset) is present in the GitHub repository (link at the bottom). You can download it from there and get started.

You can load the dataset into your own local variable using the read_csv method provided by the pandas library.
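Here is a minimal sketch of the loading step. The real CSV file is not reproduced here, so a tiny in-memory CSV stands in for it; the column names (Loan_ID, Gender, LoanAmount, Loan_Status) and the file name in the comment are illustrative assumptions, not taken from the actual dataset.

```python
import io

import pandas as pd

# Hypothetical stand-in for the real CSV file; column names are illustrative only.
csv_text = """Loan_ID,Gender,LoanAmount,Loan_Status
LP001,Male,120,Y
LP002,Female,,N
LP003,,66,Y
"""

# With the downloaded file you would pass its path instead,
# e.g. pd.read_csv("train.csv") (file name assumed).
train = pd.read_csv(io.StringIO(csv_text))

print(train.shape)   # number of rows and columns
print(train.head())  # first few rows
```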

After loading the dataset, the main work starts. Now we will analyze the dataset and clean it if it has irrelevant or missing values.

The foremost step in data analysis is to find the NULL values. The following methods show how many NULL values are present in the dataset:

- train.isnull().sum() - NULL values in each column.

- train.isnull().sum().sum() - NULL values in the entire dataset.

Once you know where the NULL values are, you can handle them in one of these ways:

- Drop the ROWS which contain NULL values.

- Drop the COLUMNS which contain NULL values (if the column(s) are irrelevant).

- Fill NULL values with the mean/median in numeric columns or the mode in categorical columns.
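The NULL checks can be sketched as follows. The small DataFrame is made-up sample data with assumed column names; note that the total for the entire dataset comes from chaining a second sum() onto the per-column counts.

```python
import numpy as np
import pandas as pd

# Made-up sample data; column names are assumptions for illustration.
train = pd.DataFrame({
    "Gender": ["Male", "Female", None, "Male"],
    "LoanAmount": [120.0, np.nan, 66.0, np.nan],
    "Loan_Status": ["Y", "N", "Y", "N"],
})

# NULL values in each column (returns one count per column).
nulls_per_column = train.isnull().sum()
print(nulls_per_column)

# NULL values in the entire dataset (sum of the per-column counts).
total_nulls = train.isnull().sum().sum()
print(total_nulls)
```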

Here, I have dropped the rows which contain NULL/missing values.

- dropna() - drops the rows (or columns) that contain NULL values. The subset parameter tells the method which columns to check for NULL values when dropping rows. It is useful when you don't want to drop every row that has a NULL value: you can drop the rows with NULLs in some columns and fill the NULLs in the other columns with the mean or any other value.

- fillna() - fills null values in specified columns with specified values.
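A minimal sketch of combining the two methods, again on made-up data with assumed column names: rows are dropped only where Gender is missing, and the remaining gaps in LoanAmount are filled with the median. Which columns to drop on and which to fill is a choice for illustration, not necessarily the one made in the project.

```python
import numpy as np
import pandas as pd

# Made-up sample data; column names are assumptions for illustration.
train = pd.DataFrame({
    "Gender": ["Male", "Female", None, "Male"],
    "LoanAmount": [120.0, np.nan, 66.0, 100.0],
    "Loan_Status": ["Y", "N", "Y", "N"],
})

# Drop only the rows where Gender is NULL; NULLs in other columns are kept.
dropped = train.dropna(subset=["Gender"])

# Fill the remaining NULLs in the numeric column with that column's median.
filled = dropped.copy()
filled["LoanAmount"] = filled["LoanAmount"].fillna(filled["LoanAmount"].median())

print(filled)
print(filled.isnull().sum().sum())  # total NULL values left
```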

After dropping and filling NULL values, the dataset has 0 NULL values and is clean. Next, it is necessary to check whether the correct data type is assigned to each column.

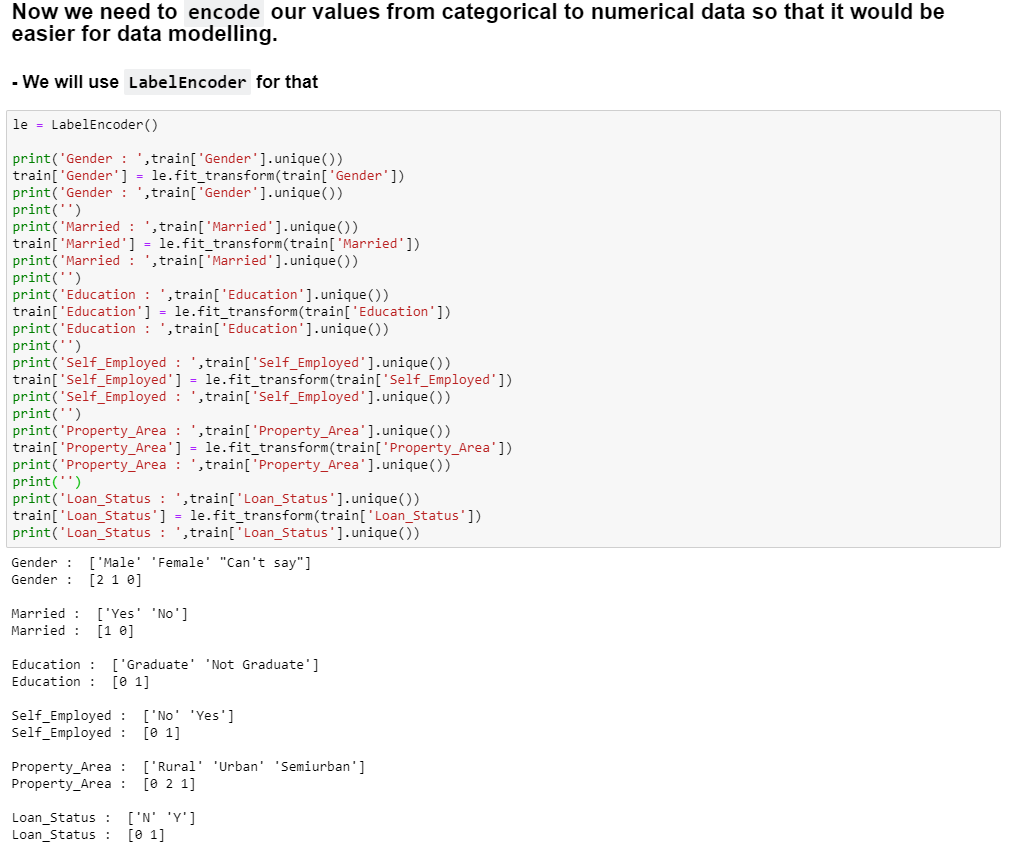

To avoid data type mismatch errors later, we convert each column once to the data type that matches the values it holds. After the conversion, we can see that all of the values have a numerical data type.
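One way such a conversion might look is sketched below. The columns, the category labels, and the 1/0 encoding are assumptions for illustration; the project's actual mapping is not shown.

```python
import pandas as pd

# Made-up sample data; column names and labels are assumptions.
train = pd.DataFrame({
    "Gender": ["Male", "Female", "Male"],
    "LoanAmount": ["120", "66", "100"],  # numbers stored as text
    "Loan_Status": ["Y", "N", "Y"],
})
print(train.dtypes)  # inspect the data types before converting

# Encode the categorical columns as integers (encoding chosen for illustration).
train["Gender"] = train["Gender"].map({"Male": 1, "Female": 0}).astype("int64")
train["Loan_Status"] = train["Loan_Status"].map({"Y": 1, "N": 0}).astype("int64")

# Convert numeric text to real numbers.
train["LoanAmount"] = pd.to_numeric(train["LoanAmount"])

print(train.dtypes)  # every column is numeric now
```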

Now that we have analyzed the dataset, we know enough about it to move on to the next step, which is a very interesting one.

The next step is Data Visualization. We can create graphs from the dataset that reveal patterns, help us understand it better, and convey our findings.
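As one possible example, the sketch below draws a bar chart of loan status by gender. The data, the column names, and the output file name are made up for illustration, and the chart type is just one option among many.

```python
import matplotlib
matplotlib.use("Agg")  # render to a file so no display is needed
import matplotlib.pyplot as plt
import pandas as pd

# Made-up sample data; column names are assumptions for illustration.
train = pd.DataFrame({
    "Gender": ["Male", "Male", "Female", "Female", "Male"],
    "Loan_Status": ["Y", "N", "Y", "Y", "Y"],
})

# Count applicants for every Gender / Loan_Status combination.
counts = train.groupby(["Gender", "Loan_Status"]).size().unstack(fill_value=0)

# Grouped bar chart: one group per gender, one bar per loan status.
ax = counts.plot(kind="bar")
ax.set_xlabel("Gender")
ax.set_ylabel("Number of applicants")
ax.set_title("Loan status by gender")
plt.tight_layout()
plt.savefig("loan_status_by_gender.png")  # hypothetical output file name
```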

After visualizing the dataset, we can train models on it and predict our target variable. I have used different classification algorithms.
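A minimal sketch of the modeling step with scikit-learn follows. Since the cleaned loan dataset is not reproduced here, synthetic data from make_classification stands in for it. Naive Bayes and random forest are named in this post; logistic regression is included only as an assumed example of the "other algorithms".

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Synthetic stand-in for the cleaned loan data (features X, target y).
X, y = make_classification(n_samples=500, n_features=8, random_state=42)

# Hold out 20% of the rows to measure accuracy on unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

models = {
    "Naive Bayes": GaussianNB(),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=42),
    "Logistic Regression": LogisticRegression(max_iter=1000),  # assumed extra model
}

# Train each model and record its accuracy on the held-out test set.
scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = accuracy_score(y_test, model.predict(X_test))
    print(f"{name}: {scores[name]:.3f}")
```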

If you look at the results, the accuracy of the random forest algorithm is very low compared to Naive Bayes and the other algorithms. Choosing the best algorithm for your model is a very important and rigorous task. It is not a matter of trial and error. You will learn how to choose once you start working with the algorithms and understanding how they work.
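One common way to compare algorithms more systematically than a single train/test split is k-fold cross-validation. The sketch below is a suggestion rather than the method used in this project, and it again runs on synthetic stand-in data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.naive_bayes import GaussianNB

# Synthetic stand-in for the cleaned loan data.
X, y = make_classification(n_samples=500, n_features=8, random_state=42)

models = {
    "Naive Bayes": GaussianNB(),
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=42),
}

# Average accuracy over 5 folds gives a steadier estimate than one split.
mean_scores = {}
for name, model in models.items():
    fold_scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    mean_scores[name] = fold_scores.mean()
    print(f"{name}: {mean_scores[name]:.3f}")

best = max(mean_scores, key=mean_scores.get)
print("Best by cross-validated accuracy:", best)
```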

There is a possibility that I have made a mistake while coding, so please comment with your suggestions and questions. I would be happy to answer and interact with you regarding the project or any other relevant topic. Thanks for reading.

Also, subscribe to the Telegram channel for more updates.

HAPPY CODING future Data Scientists!
